Why do we need agreement on the “Definition of Done” (Done-Done) in Agile development with Scrum?

The primary purpose of an agile project is to deliver value at the end of each sprint and release. Agile team members should therefore share a common understanding of what a quality deliverable is.

A “Definition of Done” for iterations, releases, and every user story or feature makes that shared understanding clear and measurable to all team members. On paper, it is an audit checklist that drives the quality completion of an agile activity. The whole process of creating, maintaining, reviewing, and updating it, and of making the process, practice, and technology changes the team needs to reach Done-Done, helps establish the right standards and benchmarks for continuous improvement.

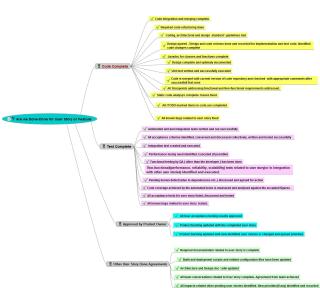

I have created mind maps listing the “Done-Done” items for a user story and for the end of an iteration. They are meant to help and guide an agile team as it brainstorms, identifies, evaluates, and creates its own Done-Done checklist.
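To make the “clear and measurable” idea concrete, here is a minimal Java sketch of a Done-Done checklist held as data: a story counts as Done-Done only when every agreed item is ticked, and anything still open is listed. The class and method names (`DoneDoneChecklist`, `tick`, `outstanding`) are hypothetical, and the sample items are abbreviated from the checklists in this post; a team would plug in its own agreed items.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch: a Definition of Done as an auditable checklist.
 * Item names are examples only; each team agrees on its own list.
 */
public class DoneDoneChecklist {
    // LinkedHashMap keeps the items in the order the team agreed on them.
    private final Map<String, Boolean> items = new LinkedHashMap<>();

    public DoneDoneChecklist(List<String> agreedItems) {
        for (String item : agreedItems) {
            items.put(item, false);
        }
    }

    /** Marks an agreed item as satisfied; items outside the agreement are rejected. */
    public void tick(String item) {
        if (!items.containsKey(item)) {
            throw new IllegalArgumentException("Not part of the agreed Definition of Done: " + item);
        }
        items.put(item, true);
    }

    /** Returns the items that still block "Done-Done", in agreed order. */
    public List<String> outstanding() {
        List<String> open = new ArrayList<>();
        items.forEach((item, done) -> {
            if (!done) {
                open.add(item);
            }
        });
        return open;
    }

    public boolean isDoneDone() {
        return outstanding().isEmpty();
    }

    public static void main(String[] args) {
        DoneDoneChecklist story = new DoneDoneChecklist(List.of(
                "Code integration and merging complete",
                "Unit tests written and passing",
                "Acceptance criteria tested",
                "Approved by Product Owner"));
        story.tick("Code integration and merging complete");
        story.tick("Unit tests written and passing");
        System.out.println("Done-Done? " + story.isDoneDone());
        System.out.println("Outstanding: " + story.outstanding());
    }
}
```

Rejecting unknown items in `tick` is a deliberate choice: it keeps the checklist tied to what the team actually agreed, so changes go through the retrospective rather than creeping in silently.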

Done-Done for ‘User Story’ Mind Map

Done-Done for ‘End of Iteration’ (Sprint) Mind Map

Done-Done for User Story or Feature Checklist Points

Code Complete

- Code integration and merging complete.

- Required code refactoring done

- Coding, architectural, and design standards/guidelines met

- Design agreed: design and code reviews done and recorded for implementation and test code; identified code changes complete

- Javadoc for classes and functions complete

- Design complete and optimally documented

- Unit tests written and successfully executed

- Code merged with the current version in the code repository and checked in with appropriate comments after successful test runs

- All points of the story covering functional and non-functional requirements addressed.

- Static code analysis complete. Issues fixed.

- All //TODO marked items in code are completed

- All known bugs related to user story fixed
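The “All //TODO marked items in code are completed” item is easy to check automatically. Below is a minimal Java sketch, assuming sources live under a `src` directory and that only `//TODO` or `// TODO` line comments count (block-comment TODOs are not detected). `TodoScanner` and `findTodoLines` are hypothetical names; a static analysis tool the team already runs may offer an equivalent rule.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical pre-merge check: reports //TODO markers left in Java sources. */
public class TodoScanner {

    // Matches "//TODO" and "// TODO" line comments only.
    private static final Pattern TODO_COMMENT = Pattern.compile("//\\s*TODO");

    /** Returns the 1-based line numbers in {@code source} that contain a //TODO marker. */
    public static List<Integer> findTodoLines(String source) {
        List<Integer> hits = new ArrayList<>();
        String[] lines = source.split("\\R", -1);
        for (int i = 0; i < lines.length; i++) {
            if (TODO_COMMENT.matcher(lines[i]).find()) {
                hits.add(i + 1);
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        Path root = Paths.get(args.length > 0 ? args[0] : "src");
        int total = 0;
        try (Stream<Path> paths = Files.walk(root)) {
            List<Path> javaFiles = paths
                    .filter(p -> p.toString().endsWith(".java"))
                    .collect(Collectors.toList());
            for (Path file : javaFiles) {
                for (int line : findTodoLines(Files.readString(file))) {
                    System.out.println(file + ":" + line + ": unresolved //TODO");
                    total++;
                }
            }
        }
        // A non-zero exit code lets a CI build treat leftover TODOs as "not done".
        System.exit(total == 0 ? 0 : 1);
    }
}
```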

Test Complete

- Automated unit and integration tests written and run successfully

- All acceptance criteria identified, discussed collectively, written, and tested successfully

- Integration test created and executed

- Need for performance testing identified; performance tests executed (if possible)

- Functional testing done by QA (someone other than the developer)

- Non-functional (performance, reliability, scalability) tests related to the user story (alone or integrated with other user stories) identified and executed.

- Pending known defects (due to dependencies, etc.) discussed and actions agreed

- Code coverage achieved by the automated tests is measured and analysed against the accepted figures

- All acceptance tests for the user story listed, discussed, and tested

- All known bugs related to user story tested.
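“Measured and analysed against the accepted figures” can be turned into a simple pass/fail gate. The Java sketch below assumes the covered and missed line counts are read from whatever coverage report the team's tool produces; the 80% minimum is an assumed team agreement, not a standard, and `CoverageGate` is a hypothetical name.

```java
/**
 * Hypothetical coverage gate. The agreed minimum (80% in main) is an
 * example team figure, not a recommended value.
 */
public class CoverageGate {

    /** Line coverage as a percentage, from covered and missed line counts. */
    public static double lineCoverage(long covered, long missed) {
        long total = covered + missed;
        if (total == 0) {
            return 100.0; // nothing to cover; treat as fully covered
        }
        return 100.0 * covered / total;
    }

    /** True when measured coverage meets or exceeds the agreed minimum. */
    public static boolean meetsAgreedFigure(long covered, long missed, double minimumPercent) {
        return lineCoverage(covered, missed) >= minimumPercent;
    }

    public static void main(String[] args) {
        long covered = 412;
        long missed = 88; // example counts, as if copied from a coverage report
        double agreed = 80.0;
        double actual = lineCoverage(covered, missed);
        System.out.printf("Line coverage %.1f%% (agreed minimum %.1f%%): %s%n",
                actual, agreed, meetsAgreedFigure(covered, missed, agreed) ? "DONE" : "NOT DONE");
    }
}
```

Keeping the threshold as a parameter rather than a constant reflects the checklist's wording: the figure is something the team agrees on and may revisit.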

Approved by Product Owner

- All user acceptance testing results approved

- Product backlog updated with the completed user story

- Product backlog updated with newly identified user stories or changed, agreed priorities

Other User Story Done Agreements

1. Required documentation related to user story is complete

2. Build and deployment scripts and related configuration files have been updated

3. Architecture and design documents/wiki updated

4. All team conversations related to the user story complete; team agreement achieved

5. All impacts on other pending user stories identified. New priorities (if any) identified and recorded

Done-Done for Iteration (Sprint) Checklist Points

Code Complete for All User Stories/Features

1. Code integration and merging complete.

2. Required code refactoring done.

3. Coding, architectural, and design standards/guidelines met.

4. Design agreed: design and code reviews done and recorded for implementation and test code; identified code changes complete.

5. Javadoc for classes and functions complete

6. Design for all user stories complete.

7. Unit tests written and successfully executed.

8. Code merged with the current version in the code repository and checked in with appropriate comments after test runs.

9. All points of each story covering functional and non-functional requirements addressed.

10. Static code analysis complete. Issues fixed.

11. All //TODO items in code are completed.

12. Code is deployment-ready. This means environment-specific settings are extracted from the code base.

13. All known bugs for the user stories fixed.
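Item 12 (“environment-specific settings are extracted from the code base”) usually means the same build reads its settings from the environment it is deployed to. A minimal Java sketch, assuming settings arrive as environment variables; the names `APP_DB_URL` and `APP_LOG_LEVEL` and the class `AppConfig` are hypothetical. Taking a `Map` instead of calling `System.getenv()` directly keeps it testable.

```java
import java.util.Map;
import java.util.Objects;

/**
 * Hypothetical sketch: configuration read from the environment instead of
 * being hard-coded, so one build can be deployed to test, staging, and production.
 */
public final class AppConfig {
    private final String databaseUrl;
    private final String logLevel;

    private AppConfig(String databaseUrl, String logLevel) {
        this.databaseUrl = databaseUrl;
        this.logLevel = logLevel;
    }

    /** Builds the configuration from a map of environment variables. */
    public static AppConfig fromEnvironment(Map<String, String> env) {
        // Required setting: fail fast rather than fall back to a hard-coded URL.
        String dbUrl = Objects.requireNonNull(env.get("APP_DB_URL"), "APP_DB_URL must be set");
        // Optional setting with a default.
        String level = env.getOrDefault("APP_LOG_LEVEL", "INFO");
        return new AppConfig(dbUrl, level);
    }

    public String databaseUrl() {
        return databaseUrl;
    }

    public String logLevel() {
        return logLevel;
    }

    public static void main(String[] args) {
        AppConfig config = fromEnvironment(System.getenv());
        System.out.println("Connecting to " + config.databaseUrl()
                + " at log level " + config.logLevel());
    }
}
```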

Test Complete for All User Stories and Other Iteration Items

1. Automated unit and integration tests written and run successfully for all iteration items.

2. All acceptance criteria identified, discussed collectively, written, and tested successfully.

3. Integration test created and executed.

4. Deployed to system test environment and passed system tests

5. Non-functional (performance, reliability, scalability, etc.) tests related to user stories (including integration with other user stories) identified and executed.

6. Functional testing done by QA (someone other than the developer).

7. Pending known defects (due to dependencies, etc.) discussed and actions agreed.

8. Code coverage achieved by the automated tests is measured and analyzed against the accepted numbers.

9. All acceptance tests for each user story listed, discussed, and tested.

10. Compliance with standards (if any) verified.

11. All known bugs tested.

12. Build deployment and testing in the staging environment complete.

Design and Architecture Reviews

- Architecture and Design enhancements discussed.

- Architecture and Design decisions documented.

- Stakeholders (developers, product owners, architects, etc.) informed about the updates.

- Impacts of Architecture and Design updates on other user stories identified and recorded.

Sprint Retrospective Complete

- Sprint retrospective meeting complete.

- Progress on actions from the last retrospective reviewed.

- Definition of Done-Done reviewed. Updates (if any) done.

- Action plan created from the retrospective items; owners assigned.

Documentation

- Code coverage reports available.

- Required Documentation related to all user stories is complete.

- Product backlog updated.

- Code review records available.

- Release notes available.

- Architecture and Design documents updated.

- Documentation change requests for pending items filed and fixed (if a documentation team member is part of the Scrum team).

- Project management artifacts reviewed and updated.

Demonstrable

- Sprint items ready for demonstrations.

- Sprint demonstrations for all user stories conducted for stakeholders.

- Feedback reviewed and recorded as planned actions: enhancement change requests or bugs filed.

- Stakeholders (the Product Owner in particular) explicitly sign off on the demonstration.

Build and Packaging

- Build scripts and packaging changes fixed, versioned, communicated, implemented, tested and documented.

- Build through continuous integration: automated build, testing, and deployment.

- Build release notes available.

- Code change log report generated from the code repository.

Product Owner Tasks

- User acceptance tests passed for all user stories and accepted by the Product Owner.

- Product backlog updated with all completed user stories.

- Newly identified user stories and other technical debt items added to the product backlog.

Other Tasks Complete

- All tasks not identified as user stories (technical debt) completed.

- IT and infrastructure updates complete.

- Stakeholders informed of the iteration release details.

- All sprint user stories demonstrations reviewed and signed off by product owner.

Good checklist


Very good checklist. Have you gotten any feedback from teams that have used this? I was wondering how teams might have tailored the list.

Bob Boyd, http://implementingagile.blogspot.com.au/


Yes, Scrum teams have found this checklist extremely useful. It gave structure to the teams' own efforts to write their DONE-DONE definitions, which evolve with each project's intrinsic needs. The checklist provided basic guidelines to explore.
